Sensors, Speech and a Data Deluge.

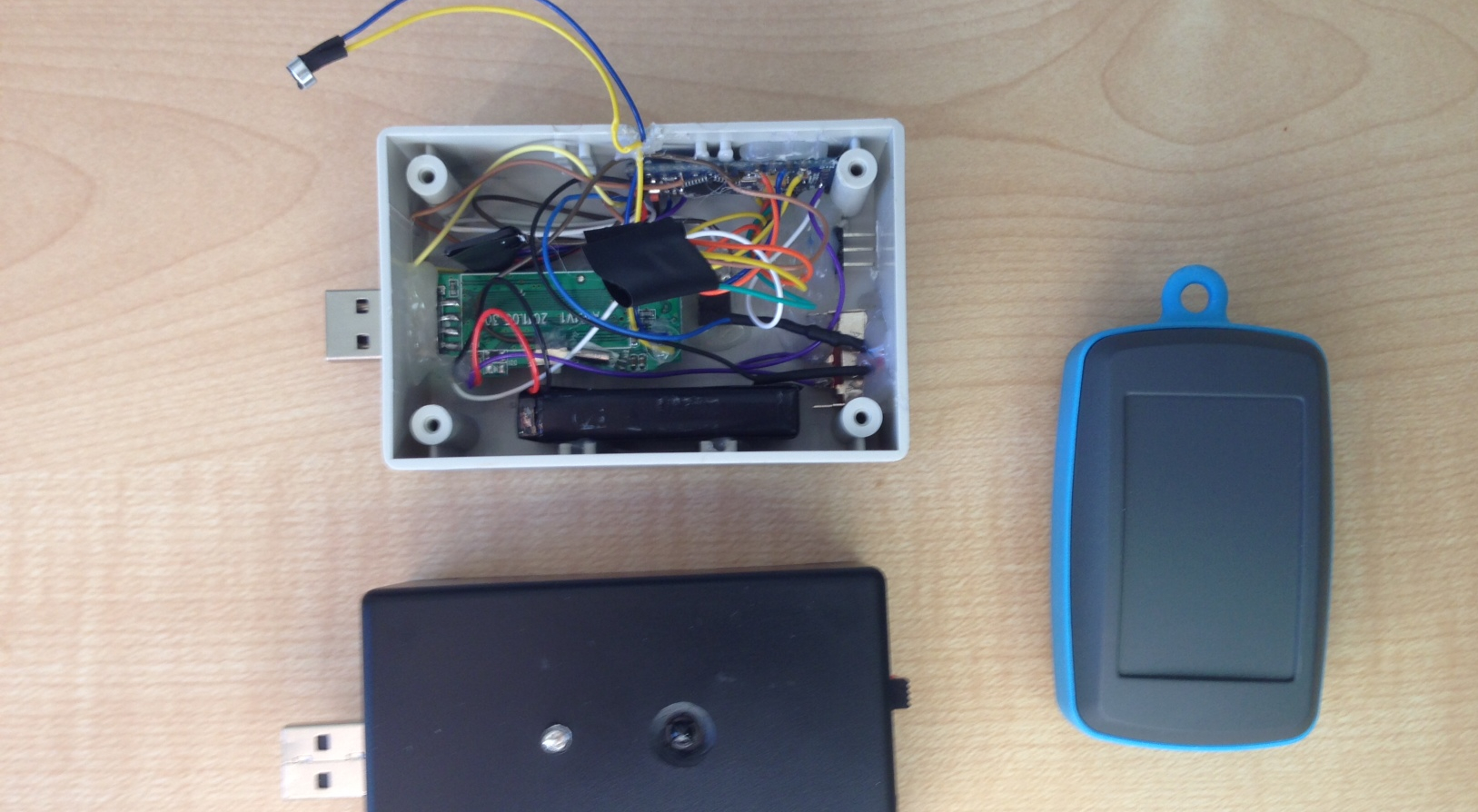

Our prototype wearable speech and proximity sensor.

Our experiments with tracking face-to-face interaction, developing speech algorithms and building wearables.

“Creativity comes from spontaneous meetings, from random discussions.”

Steve Jobs

In late 2013 and early 2014 I worked with Mike Kruger and a small engineering team to design and test a wearable that tracks speech, movement and proximity to others. We wanted to help improve team performance, and wondered: how do you quantify the clustering of people, casual collisions and serendipitous meetings? How do you track the density of social interactions and its impact on value creation? This is especially hard if you want to track these interactions for each individual, with some granularity, passively (so the data isn't open to subjective bias), and in something close to real time (rather than through post-event surveys).

“Density, the clustering of creative people – in cities, regions, and neighborhoods – provides a key spur to innovation and competitiveness.”

Richard Florida

I think we all intuitively know that face-to-face conversations, social density and spontaneous interactions are enjoyable and incredibly valuable. But in today's data-driven world, how can we identify the true value of face-to-face communication unless we can quantify these interactions, compare them, and correlate them with other metrics?

With these sorts of questions in mind, we were excited to come across the work of Alex Pentland, director of MIT’s Human Dynamics Lab. He had spent many years studying human-computer interaction, a good part of it tracking human interaction patterns using either smartphones or what he calls a Sociometric Badge: basically a microphone, an infrared transmitter and receiver, wifi and an accelerometer in a badge that hangs around a person's neck, so you can track their speech and movement and, with the IR and wifi, who they are in close physical proximity to.

The badges and mobile apps allow real-time tracking not only of spontaneous meetings and random discussions, but also of the energy and engagement of a conversation, as measured by metrics around the volume and tone of speech. Additionally, the badge’s infrared sensor passively tracks the “brief and passive contacts made going to and from home or walking about the neighborhood”, the type of interaction that post-war MIT psychologists Festinger, Schachter and Back found to be key to forming friendships. These individual features can then be aggregated to quantify the social density and clustering of people across time and place.
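As a rough illustration of that last aggregation step, the sketch below rolls individual proximity sightings up into per-place, per-hour density figures. It is not Pentland's method and not our pipeline; the tuple layout `(timestamp, zone, person_a, person_b)` and the idea of a `zone` label are hypothetical, since the badge data format isn't described here.

```python
from collections import defaultdict
from datetime import datetime


def density_by_hour(sightings):
    """Aggregate proximity sightings into (zone, hour) density figures.

    `sightings` is an iterable of (timestamp, zone, person_a, person_b)
    tuples; this layout and the `zone` location label are hypothetical.
    Returns {(zone, hour_start): (distinct_people, distinct_pairs)}.
    """
    people = defaultdict(set)
    pairs = defaultdict(set)
    for ts, zone, a, b in sightings:
        # Truncate the timestamp to the start of its hour to form the bucket.
        bucket = (zone, ts.replace(minute=0, second=0, microsecond=0))
        people[bucket].update((a, b))
        pairs[bucket].add(frozenset((a, b)))  # unordered pair: A-B == B-A
    return {bucket: (len(people[bucket]), len(pairs[bucket])) for bucket in people}
```

For example, sightings of A with B and B with C in a kitchen between 9:00 and 10:00 give `(3, 2)` for that bucket: three distinct people and two distinct pairs. Counting pairs as well as people helps separate a crowded room where few people talk from a smaller, densely connected cluster.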

Our first rough prototype, with the largest case we could possibly find.

We were pretty impressed with some of the outcomes they had from tracking social interactions and speech, so in late 2013 we started building a similar device to run our own experiments. Working with two electronics engineers, we designed a simplified speech and proximity sensor.

Shortly afterwards we got an invite to interview for Y Combinator’s Winter 2014 round. At that point the prototype was just a bundle of cords, so we packaged the electronics up in a box of sorts.

A few months later, in January 2014, we were really happy to be plugging in our first set of thirty speech and proximity sensors. This set looked a lot sexier and included a microphone, an infrared transmitter and receiver, and storage for both. Each device produced two files: a WAV file of audio, and a CSV file of infrared data recording the ID of each other device it saw.
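Raw IR sightings are just point-in-time detections, so a natural first step is to merge them into contact episodes. The sketch below assumes a hypothetical CSV layout with a header row and two columns, `timestamp` (seconds since the recording started) and `seen_id` (the ID of the other device); the real column names aren't given above. Sightings of the same device closer together than `max_gap` seconds (an arbitrary 30 s default) are treated as one continuous contact.

```python
import csv
import io
from collections import defaultdict


def contact_episodes(csv_text, max_gap=30.0):
    """Merge IR sightings into (start, end) contact episodes per other device.

    Assumes a hypothetical CSV with a header row and the columns
    `timestamp` (seconds) and `seen_id` (ID of the device that was seen).
    """
    times = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        times[row["seen_id"]].append(float(row["timestamp"]))

    episodes = defaultdict(list)
    for seen_id, ts in times.items():
        ts.sort()
        start = prev = ts[0]
        for t in ts[1:]:
            if t - prev > max_gap:  # gap too long: close the current episode
                episodes[seen_id].append((start, prev))
                start = t
            prev = t
        episodes[seen_id].append((start, prev))  # close the final episode
    return dict(episodes)
```

With sightings of device `7` at 0, 10, 20 and 100 seconds, this returns two episodes for that device, `(0.0, 20.0)` and `(100.0, 100.0)`, because the 80-second gap exceeds `max_gap`. Choosing `max_gap` is a trade-off: IR links drop out when people turn away from each other, so too small a value fragments one conversation into many.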

Our second prototype with an improved 3D printed case.

Speech Identification

Alongside the hardware, our CTO Mike worked on several algorithms for analysing the audio. This bundle of algorithms cleans the audio of background non-verbal noise, spots the human speech among all the other sounds, breaks that speech into chunks, and identifies patterns within it to determine how many different speakers there are and when each of them spoke. Another algorithm matches these ‘speakers’ against voice prints so we know who is who; to do this we need a bit of training data for each person, so we have been collecting 30-second samples to train it on. A further set of algorithms works out colocation: who is speaking to whom, who is part of the conversation, and for how long. To do this we compare the waveforms across the recordings from each separate device and work out the likelihood that two devices are recording the same sound, albeit at different volumes (due to their differing distances from the sound source).
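The colocation idea can be illustrated with normalised cross-correlation, which is insensitive to a constant volume difference between two recordings. This is a swapped-in textbook technique, not Mike's actual algorithm, which isn't described in detail. The sketch assumes both recordings share a sample rate and are already aligned to within `max_lag` samples (real devices would need clock synchronisation first). It returns a similarity score rather than a calibrated likelihood; turning it into a yes/no decision would need a threshold tuned on labelled data.

```python
import numpy as np


def colocation_score(x, y, max_lag):
    """Peak normalised cross-correlation between two recordings.

    Assumes equal sample rates and a clock offset of at most `max_lag`
    samples. Returns a value in [-1, 1]; values near 1 suggest both
    devices heard the same sound, regardless of relative volume.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = min(len(x), len(y))
    x, y = x[:n], y[:n]
    max_lag = min(max_lag, n - 2)  # keep at least two overlapping samples
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        # Align x[i + lag] with y[i] (lag >= 0) or x[i] with y[i - lag] (lag < 0).
        if lag >= 0:
            a, b = x[lag:], y[:n - lag]
        else:
            a, b = x[:n + lag], y[-lag:]
        a = a - a.mean()
        b = b - b.mean()
        denom = np.sqrt((a * a).sum() * (b * b).sum())
        if denom > 0:  # skip silent (constant) windows
            best = max(best, float((a * b).sum() / denom))
    return best
```

Because the score divides by both signals' energy, a quiet, slightly delayed copy of a sound (a device a few metres further from the speaker) still scores close to 1, while two unrelated recordings score near 0. In practice you would run this on short windows of each recording, so colocation can change as people move around.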

From this we extract a range of individual metrics for each person (tone, volume, variation, speaking time, listening time, who they spoke with, for how long, etc.) and then aggregate them to create network graphs and other funky visualisations.
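A minimal sketch of the aggregation step, covering only the timing metrics (tone and volume would need the audio itself). It assumes each conversation has already been reduced to a list of colocated participants plus diarised `(speaker, start, end)` segments; the dictionary keys are hypothetical. "Listening time" is approximated as conversation duration minus the person's own speaking time, so it includes pauses.

```python
from collections import defaultdict
from itertools import combinations


def conversation_metrics(conversations):
    """Aggregate speaking/listening time per person and pairwise edge weights.

    Each conversation is a dict with hypothetical keys:
      "participants": IDs of everyone colocated in the conversation
      "segments": non-empty list of (speaker_id, start_s, end_s) tuples
    Returns (speaking, listening, edges), where edges maps a sorted
    (person_a, person_b) pair to the seconds they spent in conversation.
    """
    speaking = defaultdict(float)
    listening = defaultdict(float)
    edges = defaultdict(float)
    for conv in conversations:
        segs = conv["segments"]
        duration = max(e for _, _, e in segs) - min(s for _, s, _ in segs)
        talk = defaultdict(float)
        for spk, start, end in segs:
            talk[spk] += end - start
        for person in conv["participants"]:
            speaking[person] += talk[person]
            # Approximation: everything the person didn't say counts as listening.
            listening[person] += duration - talk[person]
        for a, b in combinations(sorted(conv["participants"]), 2):
            edges[(a, b)] += duration
    return dict(speaking), dict(listening), dict(edges)
```

The `edges` dictionary is already a weighted network: each entry could be passed to a graph library such as networkx via `Graph.add_edge(a, b, weight=w)` for layout and visualisation.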

Pilots

We ran two technology pilots, one with Startup Weekend here in Perth and another with Australia’s top tech accelerator, Startmate, in Sydney.

At Startup Weekend there were about 77 participants, who formed ten teams and worked on their app ideas from 7pm Friday to around 6pm Sunday. We were able to track 29 of these participants with the wearable sensor, across 5 teams. We recorded the remaining participants via desktop mics placed in the middle of each team's table. We also recorded all the pitches, both those made before the weekend and the final pitches to the judges. Our intention with this pilot was to compare the aggregated team interaction patterns with performance in the competition, as measured by the judges' scores at the end of the three days.
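One simple way to make that comparison is a rank correlation between a per-team interaction metric and the judges' scores. The sketch below implements Spearman's rank correlation (Pearson correlation of the ranks, with tied values given their average rank) in plain Python; `scipy.stats.spearmanr` does the same job if SciPy is available. Which team metric to use is an open assumption here, and with only five tracked teams any coefficient would be a very weak signal.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j share the mean rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation between two equal-length sequences."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5
```

Call it as `spearman(team_metric, judge_scores)` with one value per team, in the same team order in both lists. A rank correlation suits judges' scores because they are ordinal in spirit: it only asks whether teams that interacted more also tended to rank higher, not whether the relationship is linear.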

Startmate, the tech accelerator, was a similar situation, but there were only around 30 people in the 2014 batch. Half the cohort wore the sensors while the other half were out of the building selling. The numbers on this pilot weren't great for statistical purposes, but it was a good opportunity to see how the device worked in the wild. We also recorded all the companies pitching, so we built up a good bank of audio recordings of people pitching and selling ideas, and of others ‘buying’ or not: a great corpus to compare against speaking traits.

Conclusion

In the end we closed this R&D project as we faced several critical challenges:

- The privacy issues with tracking speech made it difficult to get beyond an R&D experiment.

- Further developing, miniaturising and then producing large batches of the hardware would require large amounts of capital.

- The privacy and usability issues of the device and data captured made us think it would be a near impossible business to scale.